Table of Contents

- Context

- Design process and solution

- Key Metrics

Context

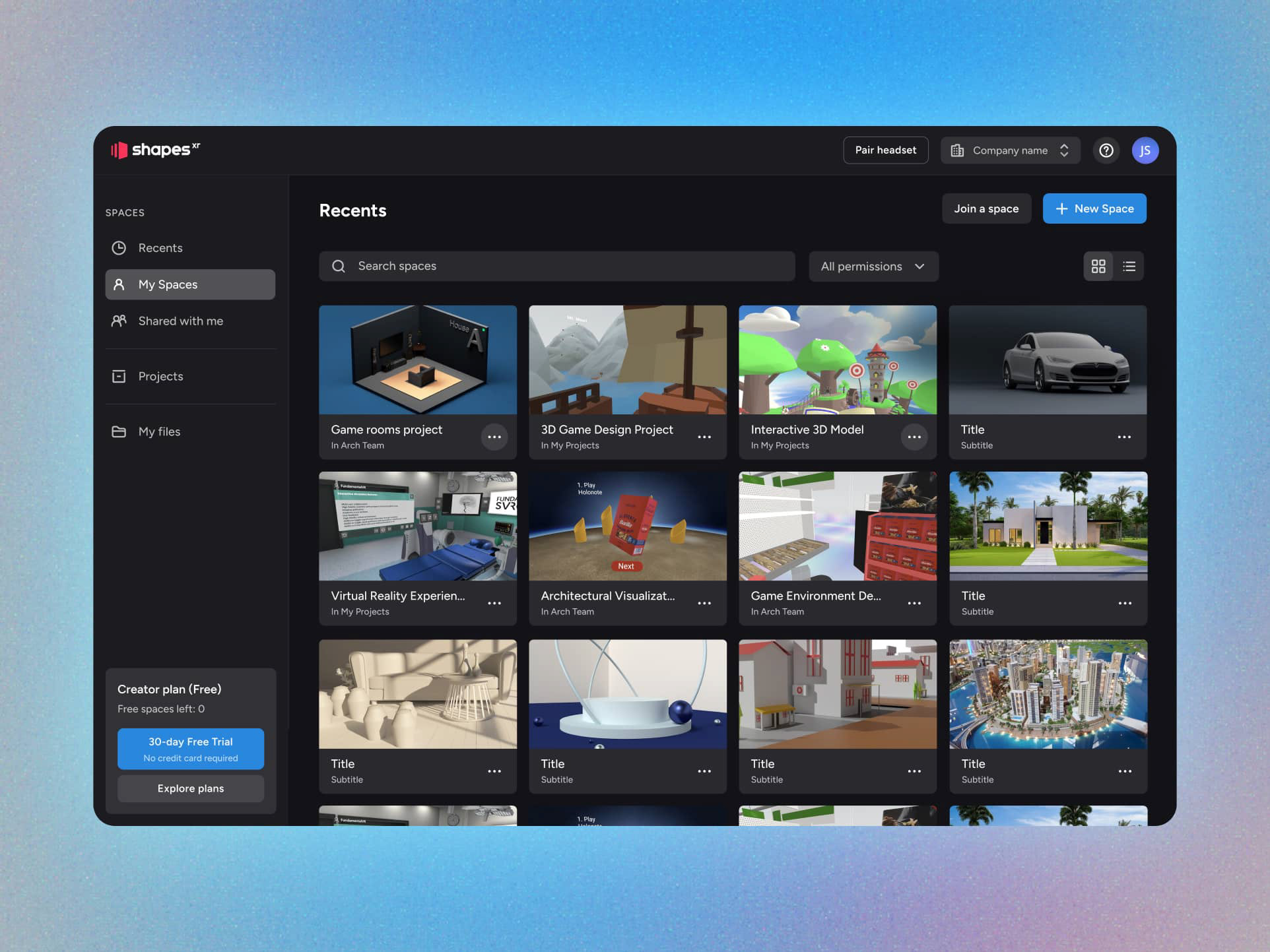

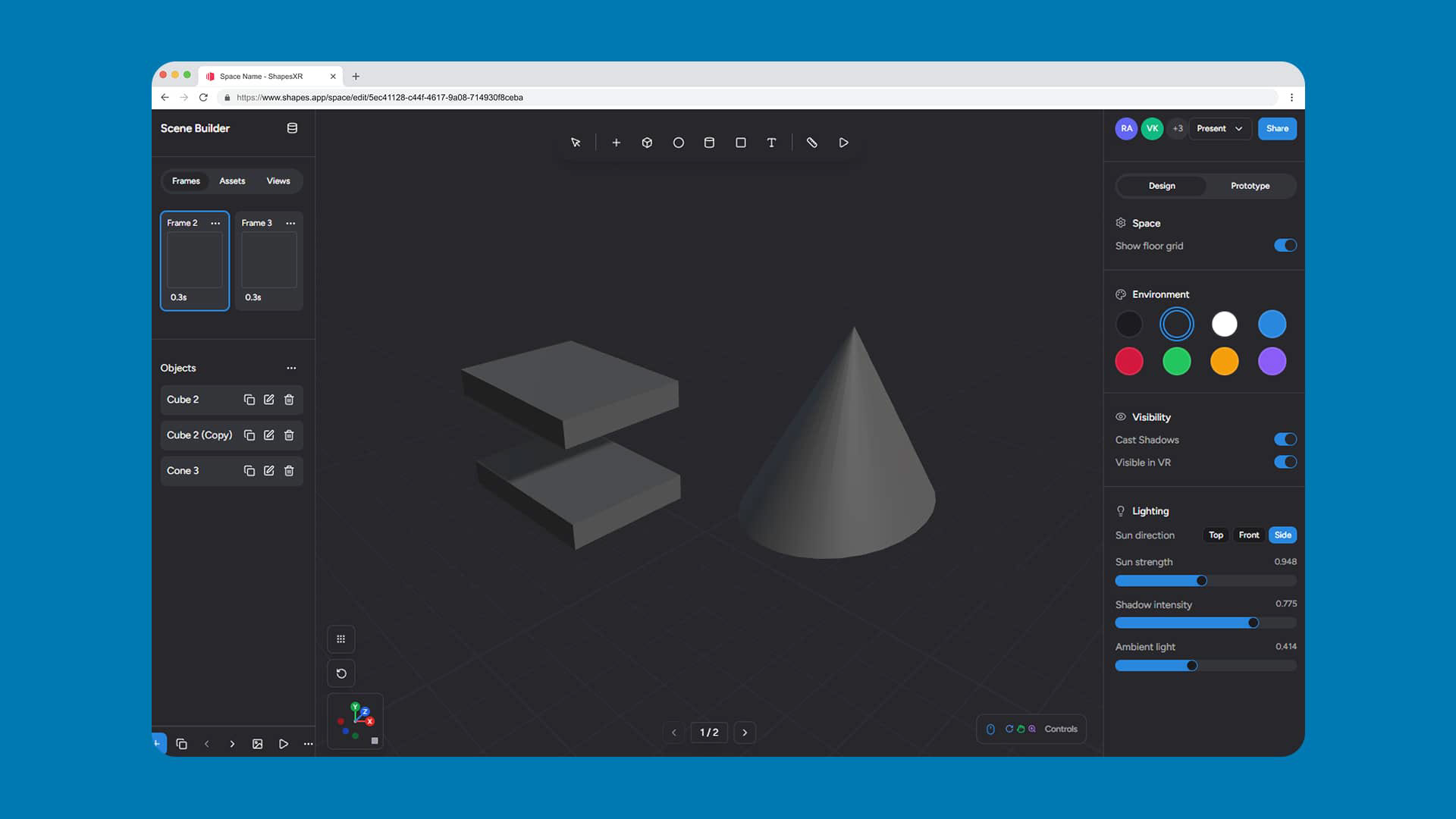

This is how AI helped me achieve more polished interactions and a smoother handoff to developers while designing this desktop web app. By building a functional prototype that could replicate even the most complex interactions, such as generating 3D primitives within the 3D canvas, I was able to validate and communicate design intent more effectively.

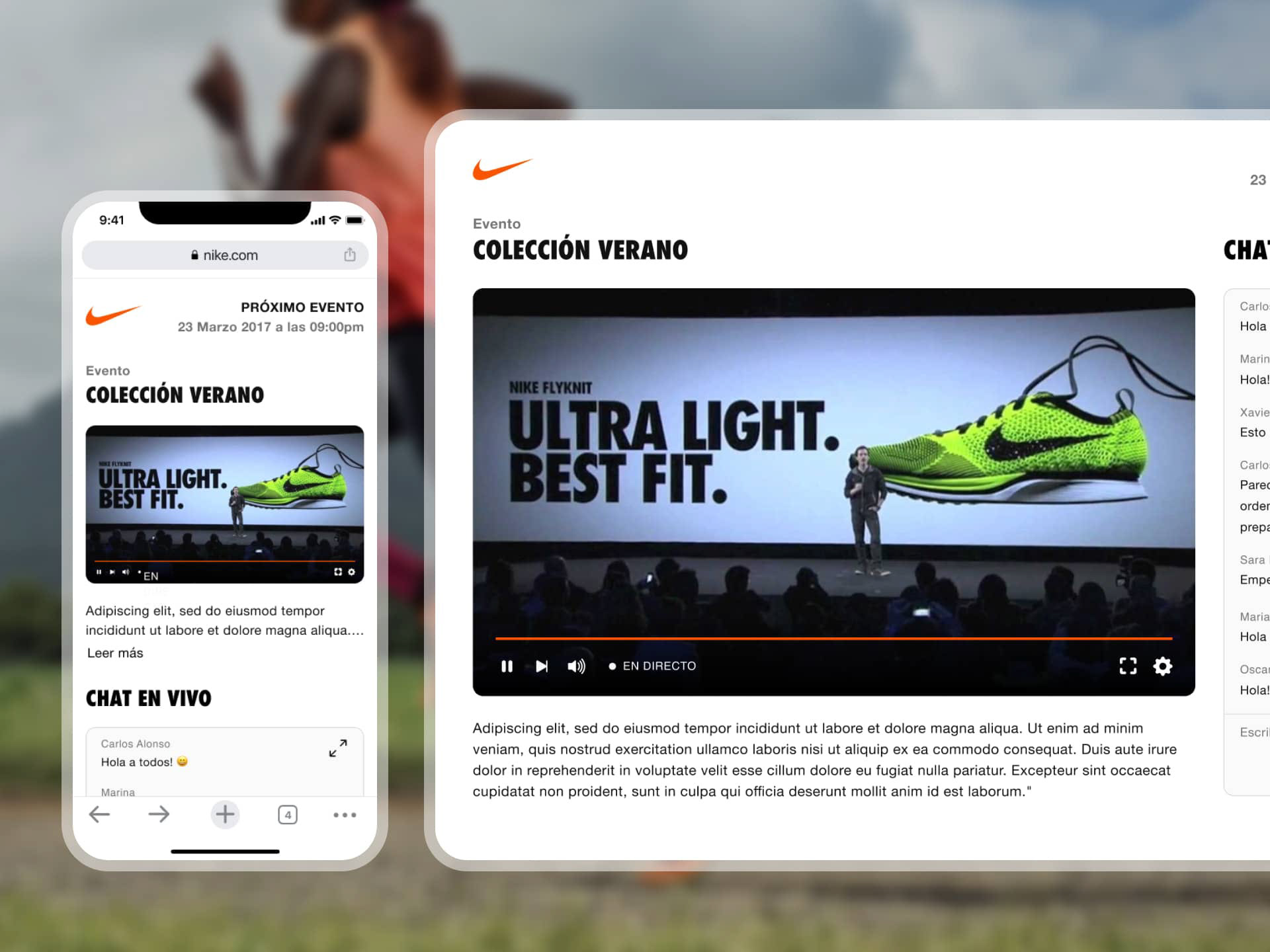

ShapesXR is an XR application that enables teams to design, co-create, and present directly in XR — often described as “the Figma for XR.”

As part of ShapesXR’s growth, the team identified the need for a desktop version of the XR app to lower the barrier to entry posed by headsets. This version would allow users to present designs and collaborate from their desktops without wearing a headset, while also benefiting from the speed and flexibility of desktop environments.

Design process and solution

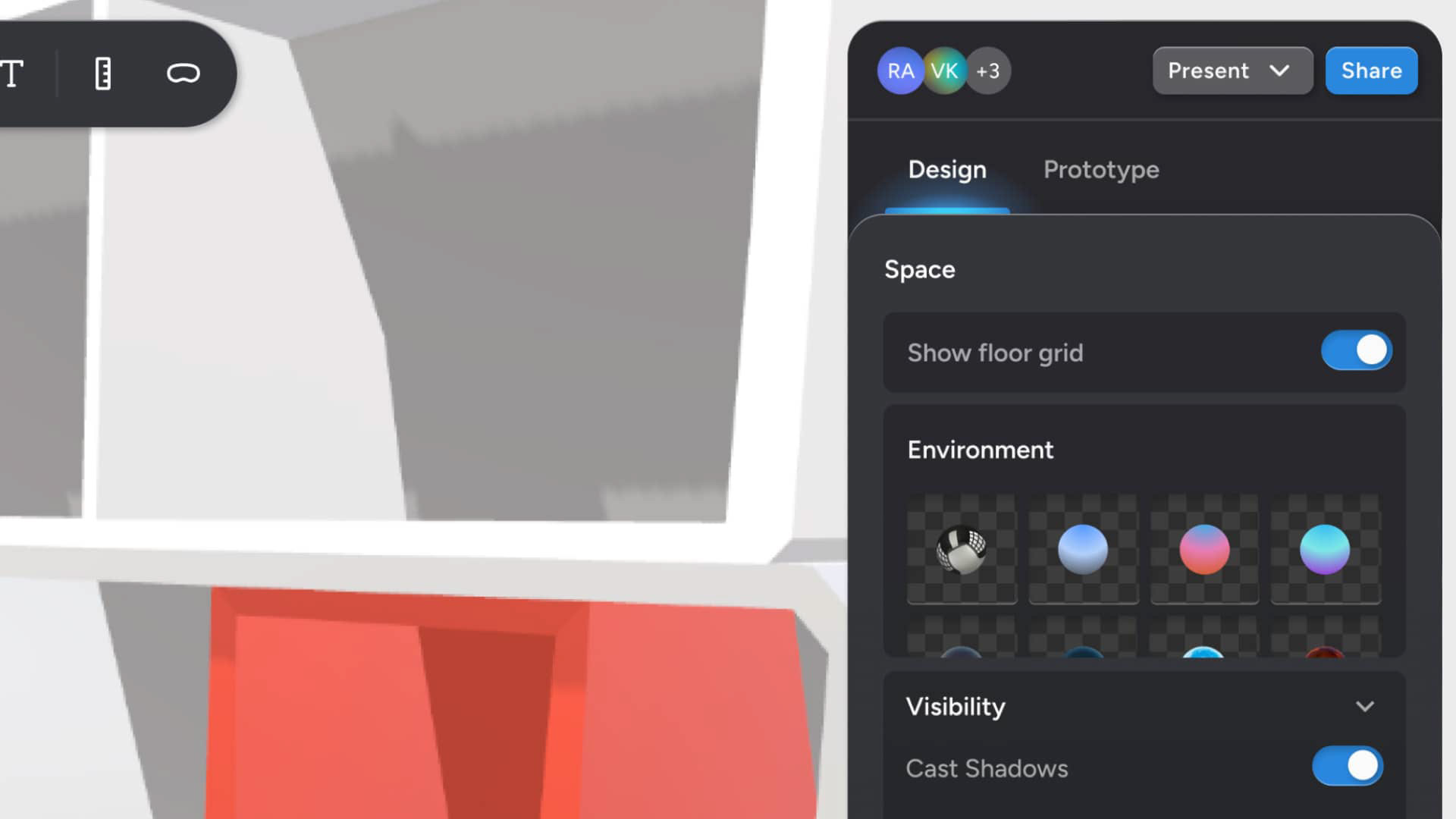

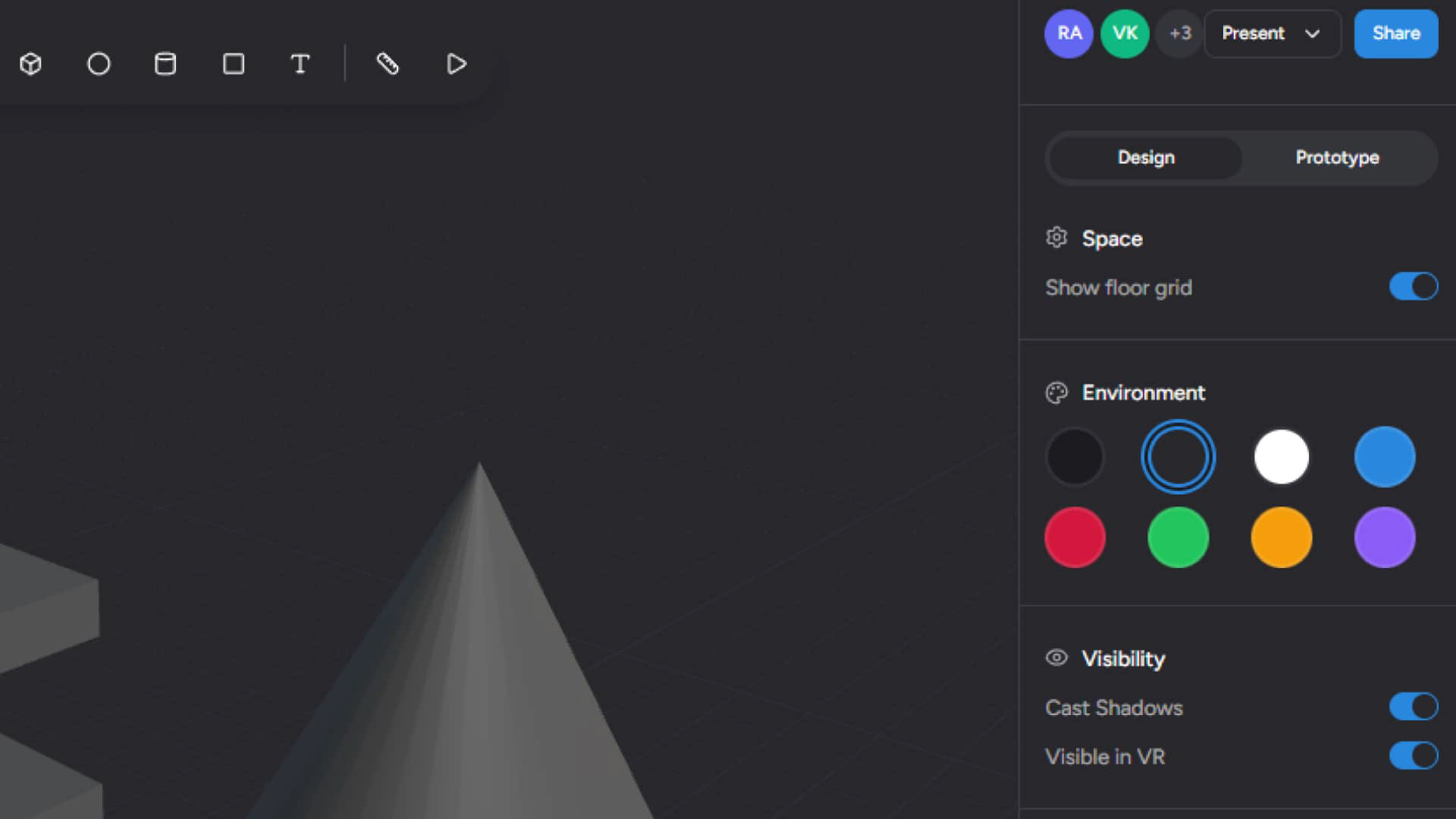

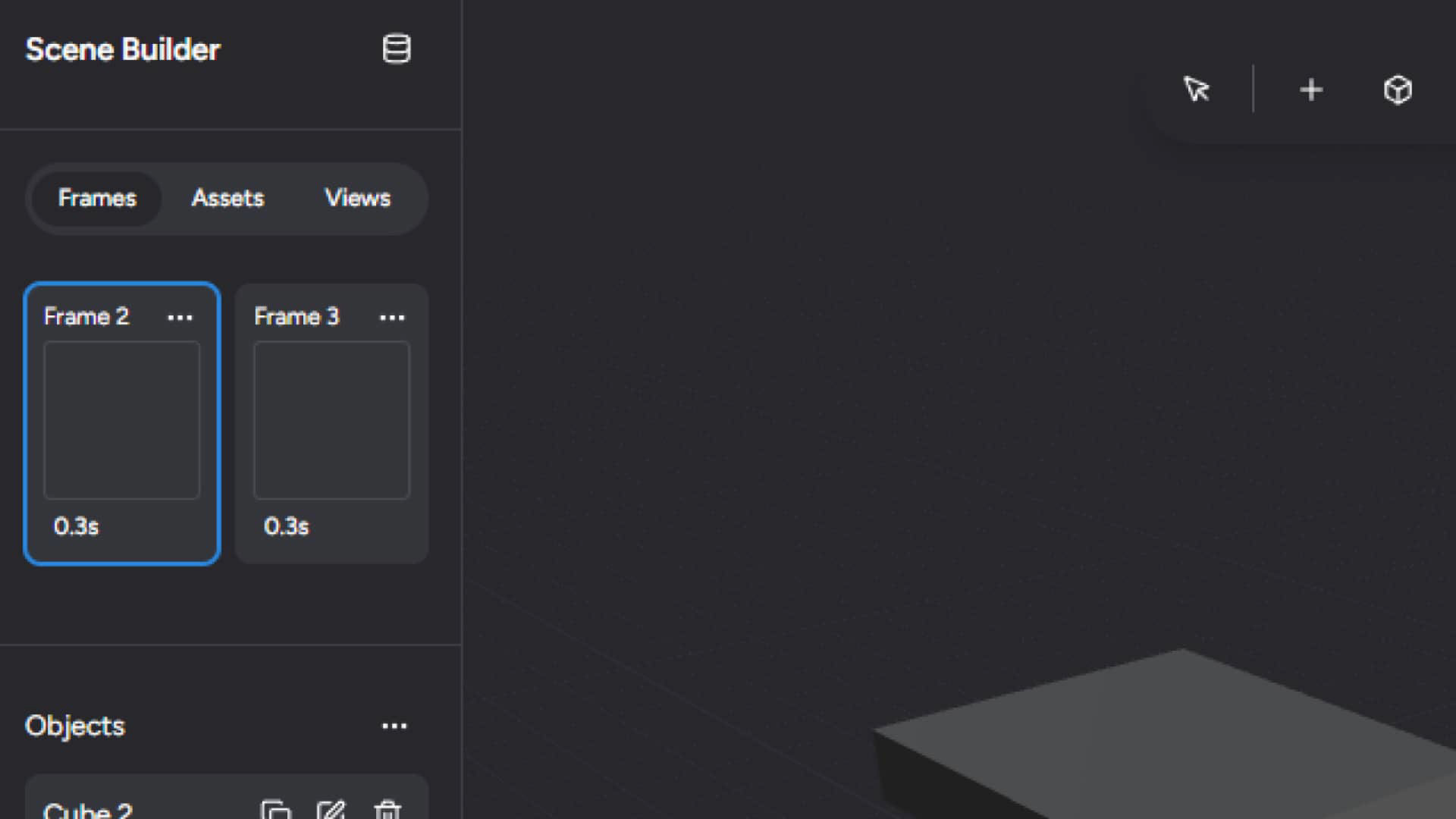

ShapesXR is an XR headset–first application, meaning its UI and overall architecture are designed specifically for immersive environments. The challenge was to bring all its features to a flat, 2D medium in the form of a desktop app. This required a new architecture, layout, and a set of custom UI components.

From a technical perspective, the ShapesXR app is built in Unity using Unity UI. As a startup, we aimed to make the process as efficient as possible by reusing existing Unity components and functionality. This approach allowed the team to ship the desktop version faster, since most of the features were already implemented in Unity.

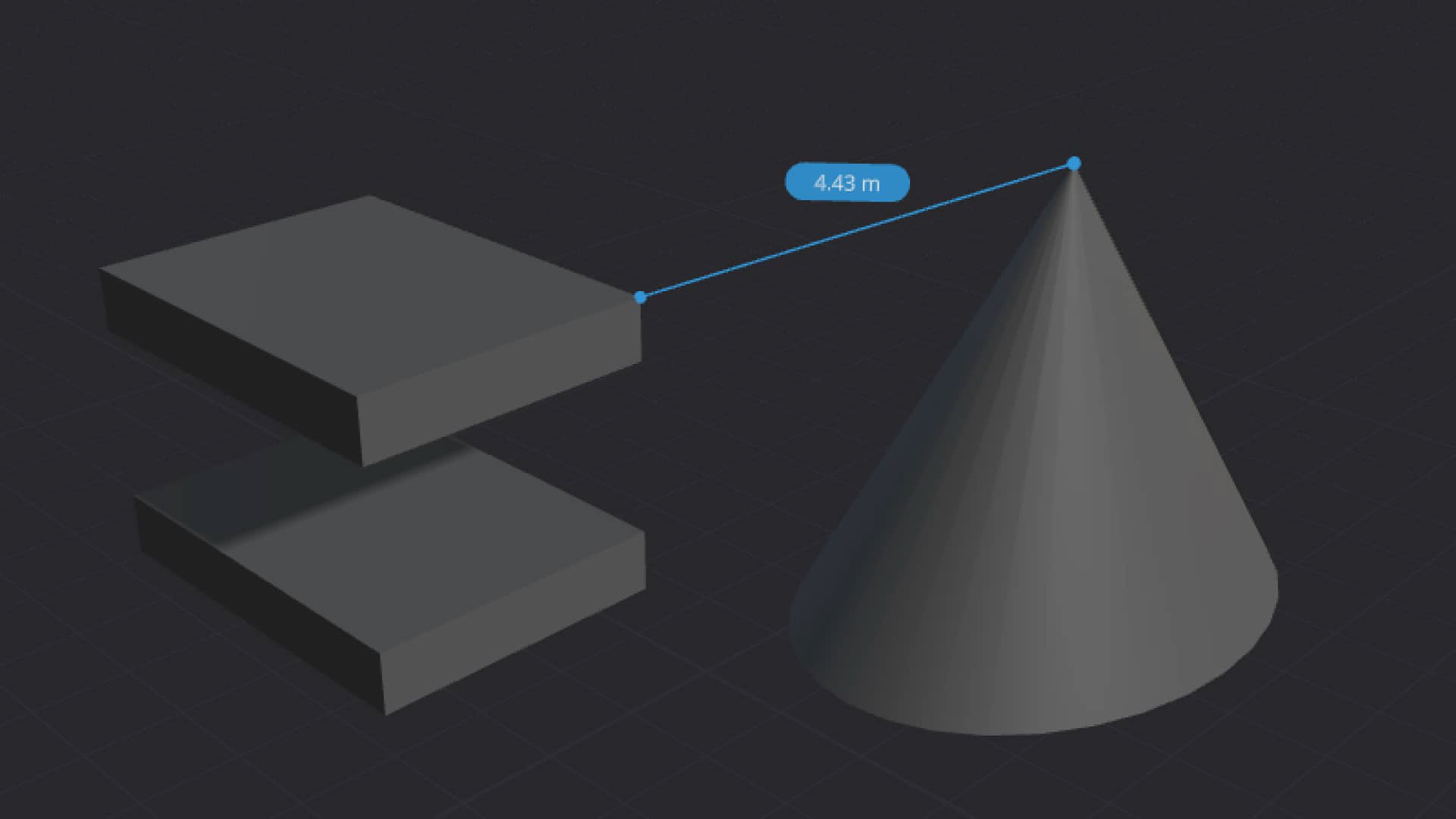

However, despite that strategic reuse, the two mediums differ so greatly that certain tools, such as the Measure Tool, required entirely new implementations. To give developers the most accurate prototype possible, I used AI to build a functional clone of the tool. This brought complex interactions to life, enabling developers not only to understand and try them but also to actively participate in the design process itself.

As part of the process, I defined our target goals and what we would consider a successful launch: at least 20% of power users using the web editor as a complement to the XR headset app within the first 3 months. I also specified the tracking events for developers to implement in Mixpanel, so we could monitor the results after launch. Check out the Key Metrics section to see how it went.

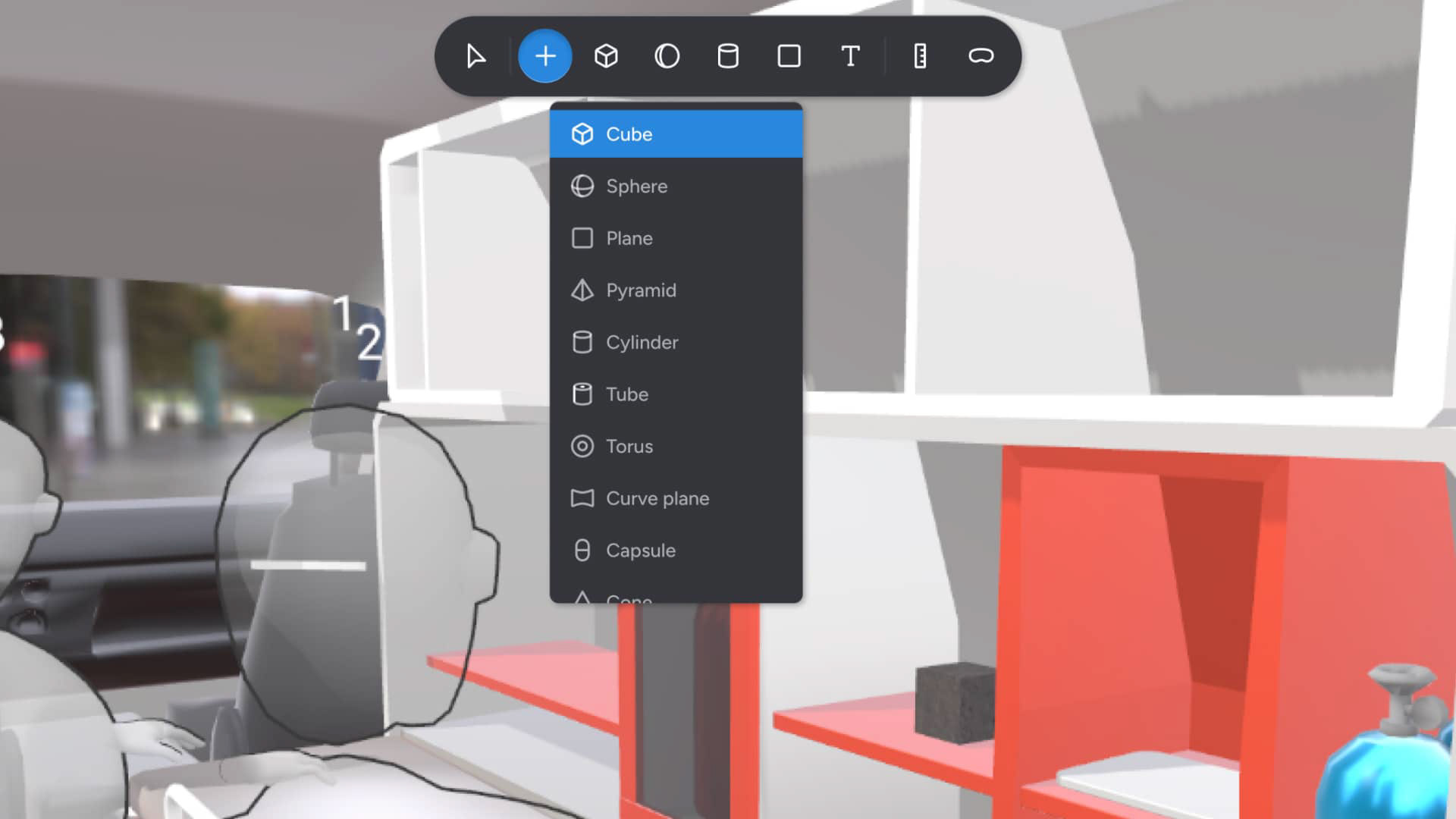

AI-powered clone

The AI-powered clone I created enabled quick testing and iteration of complex interactions, such as the Measure Tool and the creation of 3D primitives.

Key Metrics

We initially estimated that around 20% of power users would integrate the desktop app into their design workflow.

After launch, 26.3% of power users began using the web editor immediately, with a 21% retention rate by the second week. XR headset sessions did not drop significantly, indicating that users were adopting the web editor as a complement rather than a substitute, which was the intended outcome from the start.

Thank you for reading! 💙